You may soon have to show your ID on Discord.

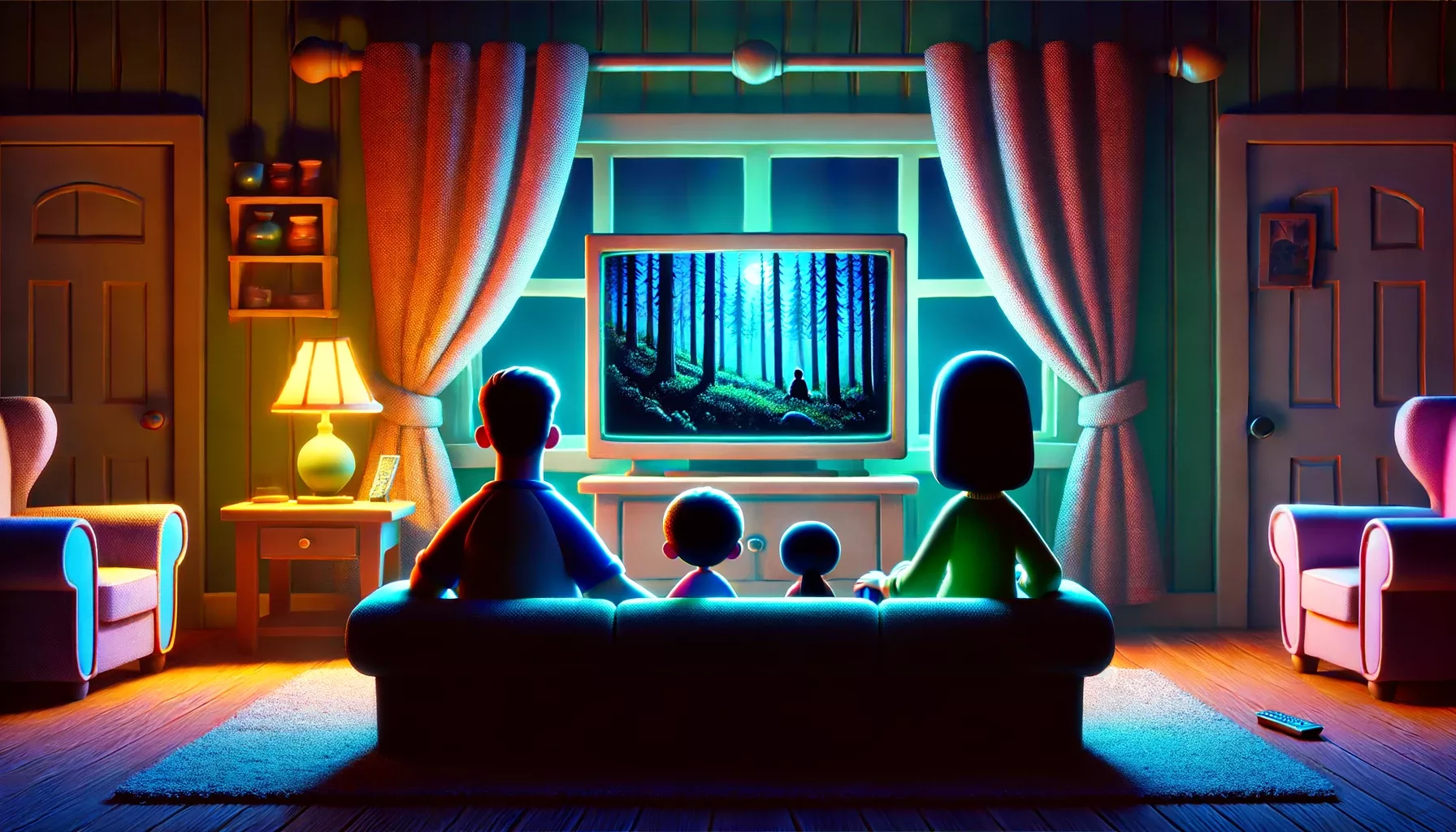

Discord has long been more than just a platform for gamers. Today, there’s a server for almost any niche interest imaginable, where people can exchange ideas, discuss topics, or simply follow along. Now, however, Discord is making a noticeable change to this open structure: the platform is rolling out age verification worldwide.

How Discord Wants To Check Users’ Ages

Discord has announced plans to introduce age verification globally. Users will have several ways to prove their age: for now, they can choose between a facial scan and an official ID, and additional options are expected to be added in the future.

Even before age verification is enforced, Discord plans to run detection software in the background. The system will analyze certain user activities to estimate whether an account likely belongs to an adult. According to Discord, private information such as direct messages will not be evaluated.

If the system cannot clearly determine whether an account belongs to an adult, it is automatically classified as underage. In that case, access to age-restricted servers is denied, and messages from unknown users are more heavily filtered.

Age verification itself is nothing new for Discord. The platform introduced similar measures in the United Kingdom and Australia last year, and these checks are now set to be expanded globally.

However, Discord does not want to rely on age checks alone to protect children and teenagers. The company has also announced the creation of a so-called Teen Council. This body is expected to consist of ten to twelve members and is meant to help make Discord more youth- and child-friendly overall, while also improving the safety of minors on the platform. As the name suggests, the council will be made up of teenagers themselves. Anyone between the ages of 13 and 17 can apply to join the Teen Council until March 1.

Balancing Youth Protection And Privacy

As understandable as the move toward greater child protection may be, it nevertheless raises questions about privacy and data protection. The background detection software in particular has drawn criticism. While Discord has stated that no private information is being analyzed, users are still automatically reviewed by software.

The central question, therefore, is not whether child protection is fundamentally sensible, but which measures can still be considered ethically acceptable. Whether Discord has found the right balance between protecting minors and respecting user privacy remains to be seen. What is clear, however, is that the introduction of age verification has reignited the debate over age limits on social media platforms.

What do you think? Let us know in the comments!